happening now! partnering with antonio citterio, AXOR presents three bathroom concepts that are not merely places of function, but destinations in themselves — sanctuaries of style, context, and personal expression.

developed by tomasz patan, the mastermind behind Jetson ONE, the personal hoverbike levitates and glides in the air with ease.

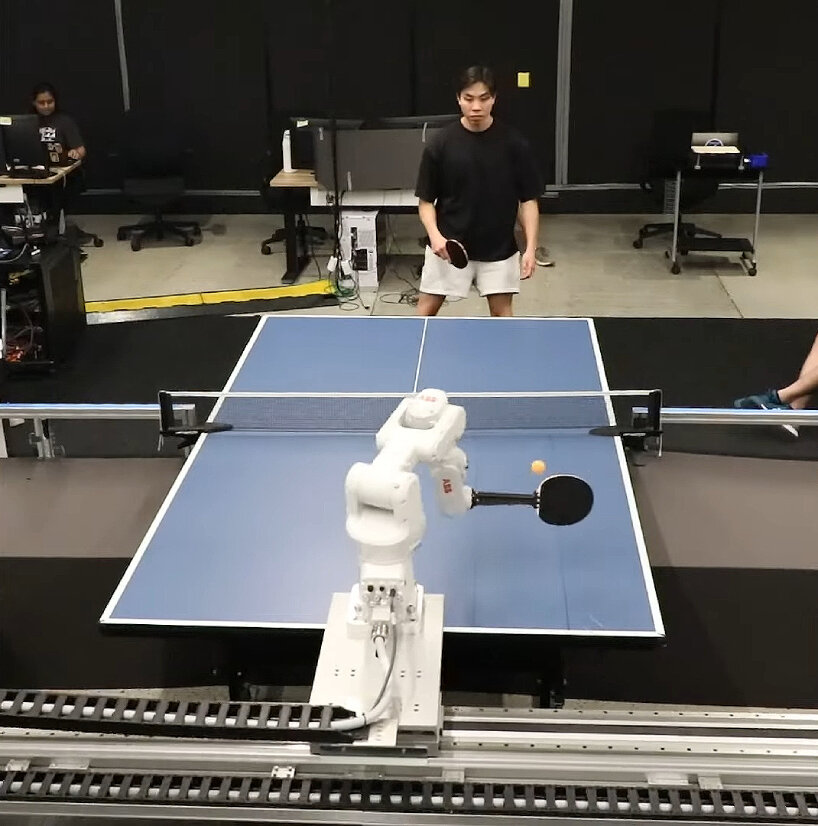

explore the series of high-tech toys and devices designed with modern features for adults.

its external body features abstract semi-circles in white and gray over a warm gray base, subtly evoking the ripples and waves of flowing water.

designboom interviews chief design officer gorden wagener to discuss the ‘frunk’, AI agents that detect the driver’s moods, and more.