Artificial intelligence, architecture and design

Artificial intelligence is dramatically transforming the nature of creative processes, with computers already playing key roles in countless fields including architecture, design, and fine arts. Indeed, the advent of text-to-image generating software such as DALL-E 2, Imagen, and Midjourney, shows that AI machines and computers are already being used as brushes and canvases. While some choose to focus on the benefits of artificial intelligence, like the increased speed and efficiency it offers, others worry that it will eventually replace designers as a whole. In any case, one thing is certain: AI is here to stay, and it will radically impact the way we create — how it will do so remains to be seen.

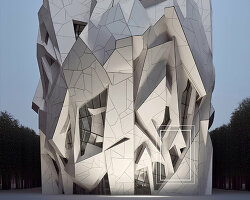

Kory Bieg, Principal at architecture, design, and research office OTA+ and Program Director UT Austin School of Architecture, is one of the many creatives that have experimented with AI generative tools. Mainly using Midjourney, Bieg has been producing architectural designs that have taken over Instagram with their striking visuals. To learn more about text-to-image AI generators, Midjourney, and the future of AI, designboom spoke with Kory Bieg. Read the interview in full below.

camouflage series

all images courtesy of Kory Bieg | @kory_bieg

interview with Kory Bieg

designboom (DB): How would you describe Midjourney to someone studying/practicing architecture who has never used it?

Kory Bieg (KB): Midjourney is a text-to-image Artificial Intelligence that uses your text (called a prompt) to construct an image of anything you want; buildings, landscapes, people, machines, really, anything. It pulls from a huge database of pictures that are tagged with a bunch of parameters, like object names, style, and even the type of view or whether it’s day, night, or raining — whatever you can think of to describe an image. Once you submit your prompt, Midjourney searches its database to find pictures that match your text, and then combines the relevant parts into a collage-like cloud of pixels that it thinks represents what you are after. You are given four images with the option to run it again, or vary any of the images to produce four new options. You can also upscale any or all of the images, which increases the resolution of the images while also adding detail. You continue to vary and upscale images until you are happy with the results.

camouflage series

DB: Can you describe how you create parameters, visual vocabularies? Do you edit and refine the result afterwards? What does this process look like?

KB: AI is amazing at constructing images in almost any style you choose. You can draw portraits in the style of Vincent Van Gogh and design buildings in the style of Zaha Hadid. And that is why I completely avoid the use of style descriptors in all of my prompts! If you are interested in emulating the style of someone else, I have found it to be much more productive and interesting to use words that someone like Van Gogh or Hadid might use to describe their own work. Words like ‘organic’ will start to produce results that might have the same qualitative elements as a project designed by Hadid, while not limiting the database of pictures from which the AI is pulling by using her name as a descriptor. By describing your desired image in more generic terms, you will uncover more interesting tangents that lead to new design territory.

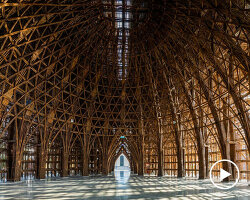

As an architect, I am also very interested in form and how to gain some control over it using AI systems like Midjourney. In my Vault Series, I used common typological and architectural descriptors to achieve some of the formal attributes I was hoping for in the output. I also used words associated with natural patterns and structures that might relate in some way to the architectural terms I was using in order to form synthetic hybrids that are not entirely natural or architectural, but reside somewhere in between. In my Text Series, I experimented with using letters to control form. Letters are made up of straights, angles, curves, arches, dots and bends, so by asking Midjourney to create images of buildings in the form of letters, you can expect certain formal conditions to appear in the imagery. Maybe it’s the academic in me, but every time I start a new prompt, I have an agenda and a set of questions I am trying to answer.

One drawback of Midjourney is that you can’t edit the prompt once you’ve started a thread. To change something or add additional text, you would have to edit the original prompt and run a new series. However, the images produced by Midjourney don’t require additional post-production, because things like lighting, saturation, and mood are all embedded in the images it creates. That said, you can easily manipulate these standard image files in any photo editing software.

vault series

DB: Speaking of visual vocabularies, did you have a rough vision of what this series would look like before starting? Were you surprised by the results?

KB: Midjourney is a creative AI, so the results will never be exactly what you expect. This is partly the result of this type of AI, which understands the context objects are typically found in, what parameters are often associated with certain object types, or even what other objects an object in your prompt is usually found with. So if you ask Midjourney to make an image of a building, it will likely add doors, windows, a street in the foreground, and a sky, without you having to include that detail in the prompt. Regardless of what you include in your text, Midjourney will always be filling in some blanks and adding detail that you might not have intended. This is also where it can be very exciting. By combining elements in the image output that you might not have considered with whatever you feed the AI, you often find yourself on tangents that are incredibly rich and often outside your comfort zone (usually a good thing). That said, if you want to be very specific about the building’s context (like placing it in a forest, rather than a city), you can include that information in the prompt. If you want it to be a cloudy sky, then specify that. If you want the doors to be made of spider webs, it can do that, you just have to type it in.

When I think about what parameters to include in my prompts, I do consider what objects Midjourney might add and whether I want to be more specific about them in my text. At the same time, I think about how these standard elements might be replaced with nonstandard alternatives to create new overlaps between otherwise unrelated elements. For example, in one series I described the roof of a building as a sponge. The output was exactly that; a building with a sponge for the roof. What Midjourney does so well, is that it doesn’t just erase the roof and plop a picture of a sponge in its place. Rather, it maps the structure of a sponge into the form of the roof, even molding it around things like windows and roof vents, so the two synthetically integrate with the overall form of the building.

vault series

DB: How does midjourney differ from DALL-E, which generates an image based on a short text input?

KB: DALL-E and Midjourney (and there are others — Disco Diffusion, Imagen, Stability AI) all use the same diffusion-based approach to generating images, but they also have very different features, and the output can vary dramatically. DALL-E, for example, has a great feature that allows you to erase part of an image and replace it with a new prompt. So, if you have a chair in your image and you want a clown sitting on it, you can erase a portion of the image, add text describing the clown, and DALL-E adds in a clown. DALL-E also lets you upload an image (Midjourney can do this too, but not as well), and with the click of a button, it will return three image variations. As a test, I uploaded a photograph of a real, built house I designed, and DALL-E gave me three very convincing variations with different roof forms and with windows and doors in new locations. Each image looked just like the original photograph. On the other hand, Midjourney gives you more control over the image-making process. It allows you to define the size and proportion of the image, how much leeway you want to give the AI (you can increase or decrease how much ‘style’ it gives the image, and you can control the amount of ‘chaos,’ or how far the AI deviates from your text). Midjourney is great as a tool for sketching and discovering entirely new design territory, while DALL-E is great for varying and editing an existing image. DALL-E, of course, also generates entirely new images from your text, but it doesn’t seem to be as geared toward speculative buildings and architecture, yet.

vault series

DB: Are there any similarities to parametric/algorithmic modeling tools like a Grasshopper plug-in?

KB: There is no doubt that these text-to-image AI’s will become an integral part of the design process, but they are an entirely new category of tool and don’t replace any of the tools we currently use as designers. I think the only overlap between Midjourney and parametric modeling tools is if a Midjourney user wants to create an image that has parametric qualities. You can include words like modular, repetitive, and parametric and they will affect the output to look somewhat like a building designed using a plug-in like Grasshopper. One interesting side note is that Midjourney does recognize images in its database that were created by various software programs, like VRay or Octane Render, and it will attempt to use the style of images made with them for the images it returns.

vault series

DB: Is the process more useful as a ‘working partner’ or a tool for creating rough and quick iterations?

KB: I often start a prompt with only a few words, just to see the effect one or two words might have on the output. I then add a few more words and run the prompt again. I do that until I am happy with the way certain words are mixing and how they are influencing the output. Once I settle on the text and other parameters of the image, like aspect ratio and the type of view (all words to include in the prompt), I vary and upscale over and over again. I probably do more variations than most people, but in my experience, the most compelling results are often discovered when following one thread for a long time. Moreover, once you get to a certain point in the lineage, it seems to start producing gem after gem, and it is well worth the time it takes to get there.

So yes, Midjourney is great for creating very quick iterations and testing ideas out before spending too much time on any one thread, but the images I post are usually days, or even weeks in the making. Of course, when compared to the sort of design process we are all used to, a few days is no time at all.

vault series

DB: Generally in architecture, designs are informed by site conditions and program — do these factors come into play when creating with AI?

KB: When I first started using AI, I found myself assigning programs to the buildings in the images. I think it was easier to think of these as images of fully formed, complete projects, but my feelings about that have changed. Now I title all my series according to the concept I am exploring with each prompt. I think it opens up more possibilities for what these can become when you think about them diagrammatically and don’t prescribe a use to them. As I’ve been translating some of these into 3d, it is rare that I make a model of the whole building anyway. It is usually a piece here and a piece there that are exciting, so I build those and scrap the rest. I am building a catalog of tons of potential building parts that I can then combine and shape into whatever site and program I am given for a real building project that I might be working on. I can already see the potential of having such a rich junkyard at my disposal, one that will continue to expand and connect to the junkyards of my friends and colleagues. It opens a whole new form of collaboration.

At the same time, Midjourney does understand the difference between different contexts and building programs. If you ask it for a hotel lobby, you will get interiors that have the feel of a hotel lobby. You can also describe the site. If it is somewhere very specific, like Times Square in Manhattan, then you can get pretty close to what you want, but it’s probably more useful to just describe elements of a building’s use or context and let Midjourney take it from there.

sponge series

DB: Is the result created as pure form with function to follow?

KB: I’ve always found the connection between function and form tricky and have never been satisfied with any of the common tropes, like form follows function. Of course, we try to match the two when a project is first designed and built, but that relationship is tenuous and often changes radically over the life of a building. A building’s form will only change if an owner decides to renovate. Function, on the other hand, is constantly changing regardless of —and often in spite of— an owner’s desire for it not to. If form is an apple, function is an orange. For that reason, I think it’s okay —even beneficial— to completely remove function from the equation when working with Midjourney and other text-to-image AI’s. I try to evaluate the images based on other criteria.

DB: Is this an exercise you would recommend to architecture/design students?

KB: This will be the question for many academics writing their syllabi for next semester. It will be exciting to see the various approaches — will studio assignments use AI as the seed for design projects, or will its use be banned? I suspect we will see a combination of both, but I am all for embracing the potential of these tools at any point in the design process. Text-to-image AI is so accessible and the output so quick, that I would recommend students use it for whatever they are working on, just to see what creative possibilities might be revealed. I wouldn’t, however, take these images as final designs. I would encourage using them as diagrams for spatial ideas that might apply to a bigger question at hand. Afterall, buildings are not just their form, there are plenty of other considerations to account for that Midjourney won’t touch.

sponge series

DB: Do the resulting images exist in purely two dimensions? If yes, are they translated into three dimensions? What is your strategy?

KB: Architects are trained to work between 2D orthographic drawings and 3D models, so this process of moving from 2D to 3D should be familiar to most designers. I think the ability to 3D model complex forms quickly will become even more useful in the coming years as we try to integrate these tools into our workflow (until the AI starts producing 3D models too). However, it’s not as easy as it seems. The 2D images created by Midjourney might look like a wide-angle photograph or rendering, but in reality, they are composites of multiple images that often have very different vanishing points or focal lengths. When you start trying to build 3D models of them, you quickly realize that they are actually impossible! That is another reason to think of these as sketches–inspirational material that still needs translation and refinement.

nature series

nature series

DB: On Instagram, Sebastian Errazuriz claims that AI is on track to replace artists (with illustrators the first to go). What are your thoughts?

I understand the concern. AI will undoubtedly change the way we practice in very fundamental ways, but I don’t think it will replace us. The same concern is raised every time a new, disruptive technology emerges, but artists and architects have persevered. In some ways, they have become even more invaluable, and more connected to contemporary culture and community. To me, it is an expertise question. Academic and professional experience will continue to impart a long history of knowledge that cannot be accessed by a lay person without years of study. AI also has access to these histories, but it’s unable to fully curate image selections because the audience and their preferences are dynamic and unpredictable. An expert is required to distill, identify, and shape our social perspectives and experience via the medium of their expertise.

I do think one major change to the way we will practice will be the need to take on a more curatorial role early in the design process. In some ways we already do this; it is extremely rare for an artist or architect to be truly original. There is always some seed of what has come before in every work. We are great at absorbing precedents by analyzing, transforming, and combining them into something new (original is not the same as new). AI has just made that even more explicit.

I also think about the role of curators in general, whether at a museum, or online, for a gallery or a journal. Their role is to create by identifying a collection of things that tell a story. We know that all curators are not the same, just as all conductors are not the same, so why would it not be the case that some (likely those with a deeper knowledge of art or architecture history, theory, and practice), are better at creating images using AI than others. Just as you would not want a public vote to determine what art a museum should exhibit, you would not want people without architectural knowledge to design and construct a building using AI. I am all for dabbling–and I can only speak for architecture–but excluding architects from the building design process would not end well for the client or our communities.

nature series

DB: are you optimistic about a future with AI?

KB: AI is already here, so I try to remain optimistic. There are some major issues we need to tackle with bias, labor, and energy, to name just a few. If we stay on the sidelines, we will have little control over how AI develops and how it is used in our respective fields. With all the risks, there will also be great opportunities. We will be able to collaborate, not only with machines, but with other professionals, researchers from other disciplines, and with clients in altogether new ways. I am extremely hopeful that AI will unlock a whole new ecosystem of creativity that is open, shared, and expansive.

nature series

happening now! swiss mobility specialist schindler introduces its 2025 innovation, the schindler X8 elevator, bringing the company’s revolutionary design directly to cities like milan and basel.